Five Technical SEO Checks in a Website Audit

By Brian Harnish

When performing a website audit, it is easy to gloss over crucial technical items if no process is in place for checking them. Technical SEO, your website’s backlink profile, and content audits are all important to consider when identifying weaknesses in a website’s SEO. The following are five crucial issues that should be identified and fixed in an on-site SEO audit.

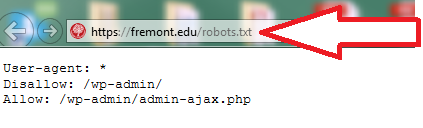

Robots.txt File

Your robots.txt file controls crawler access to files, pages, directories, and subdirectories on your website. By disallowing certain pages, you are telling search engines not to crawl them. However, the robots.txt file can introduce conflicts if it is not handled properly. Some things to think about when identifying issues in a robots.txt file include:

1. Make sure your robots.txt file allows search engines to crawl the site.

This is a big one, and it is amazing how often it comes up in SEO audits. It should be simple, but it is an easy mistake to make. Always make sure the robots.txt file on your website allows full crawling of the site by including the following lines:

User-agent: *

Disallow:

An empty Disallow value means that the site is fully open for crawling by the search engines.

If you instead include the following line:

Disallow: /

the robots.txt file will block the matching crawlers from your entire site, beginning at the site’s root directory. It is always a good idea to perform this check as part of any SEO audit.

2. Make sure crucial pages and directories are not blocked.

It is very easy to block the wrong directory, which may contain many important pages on your site. If your intention is to block a single page but the rule actually matches an entire directory, this can spell doom for every page inside it. To audit this, check your live robots.txt file with the robots.txt tester in Google Search Console (newer versions of Search Console provide a robots.txt report instead). This will help you identify any directories that are blocked by mistake.
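If you want a quick offline version of this check, a minimal sketch might look like the following. It uses Python's standard `urllib.robotparser` module; the robots.txt content, the `example.com` URLs, and the `blocked_urls` helper name are all made up for illustration, and Python's parser may not match Google's own matching rules in every edge case.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical robots.txt: the intent was to block one page, but the rule
# blocks the whole /blog/ directory instead.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /blog/
"""

# Hypothetical list of pages that must stay crawlable.
CRUCIAL_URLS = [
    "https://www.example.com/",
    "https://www.example.com/blog/",
    "https://www.example.com/blog/technical-seo-checklist",
    "https://www.example.com/contact",
]

for url in blocked_urls(SAMPLE_ROBOTS, CRUCIAL_URLS):
    print("BLOCKED:", url)
```

Passing `"User-agent: *\nDisallow: /\n"` as the robots.txt text makes every URL in the list come back as blocked, which is the site-wide mistake described in check 1.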

3. Make sure other conflicts are not present.

There can also be syntax and other coding issues in the robots.txt file, which will result in Disallow rules not being properly read and applied by the search engines. Be sure to examine your robots.txt file for these inconsistencies as well.
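A rough way to catch the most common syntax slips is a small linter like the sketch below. The set of recognized directives and the three checks it performs are assumptions chosen for illustration, not a complete implementation of the robots.txt standard, and `lint_robots_txt` is a hypothetical helper name.

```python
# Directives this sketch treats as valid (an assumption, not an official list).
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(robots_txt: str) -> list[str]:
    """Return human-readable descriptions of likely syntax problems."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' after directive name")
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unrecognized directive '{field}'")
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"}:
            if not seen_user_agent:
                problems.append(f"line {number}: rule appears before any User-agent line")
            if value and not value.startswith(("/", "*")):
                problems.append(f"line {number}: path '{value}' should start with '/'")
    return problems


# Hypothetical file with three typical mistakes.
SAMPLE = """\
User-agent: *
Dissallow: /private/
Disallow /tmp/
Disallow: admin/
"""

for problem in lint_robots_txt(SAMPLE):
    print(problem)
```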

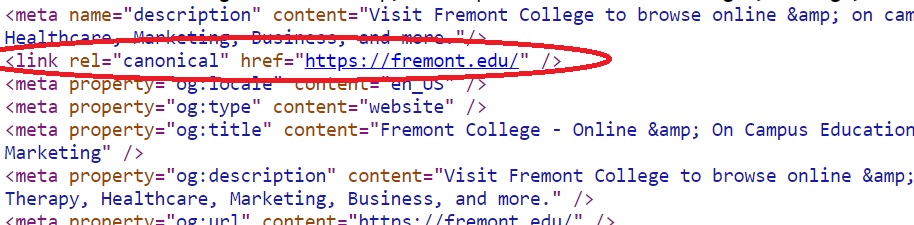

Canonical Issues

Having canonical tags on your pages is important. A canonical tag tells Google which URL should be considered the original, authoritative source of a piece of content on your website. Having the right canonicals in place, without issues, can make or break your SEO. What should you be looking out for?

1. Canonicals that point to pages with 4xx or 5xx errors.

Normally, canonicals should point to 200 OK pages that don’t error out. Sometimes, though, website audits reveal canonical URLs that return errors. This should be corrected as soon as possible, because it sends Google conflicting signals and can keep it from crawling and indexing the affected pages correctly.

2. Duplicate canonicals being identified across many different pages.

Canonicals should reference the page that they are on. However, there are instances where the same canonical URL ends up on many different pages, especially where website deployments rely on a custom CMS that manages canonicals with custom plug-ins. A crawl of the site should identify these issues quite easily.

To fix these issues, always make sure that (1) any canonical URLs returning 4xx or 5xx errors are properly redirected to their main canonical URL, and (2) all canonical URLs reference the page they are on.
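The second of those checks can be sketched in a few lines. The example below assumes you already have each page's URL and HTML from your crawler; `CanonicalParser` and `canonical_issue` are hypothetical names, and the comparison is a strict string match, so a real audit may want to normalize URLs first. Checking the status code of the canonical target would need an HTTP request and is not shown.

```python
from html.parser import HTMLParser
from typing import Optional


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values and attributes.get("href"):
            self.canonicals.append(attributes["href"])


def canonical_issue(page_url: str, html: str) -> Optional[str]:
    """Return a description of the canonical problem, or None if the page is fine."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "no canonical tag"
    if len(parser.canonicals) > 1:
        return "multiple canonical tags"
    if parser.canonicals[0] != page_url:
        return f"canonical points elsewhere: {parser.canonicals[0]}"
    return None


# Hypothetical page whose canonical references a different URL.
PAGE = '<html><head><link rel="canonical" href="https://www.example.com/shoes"></head></html>'
print(canonical_issue("https://www.example.com/shoes?color=red", PAGE))
```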

SSL Certificate Problems

Normally, a website should resolve to a single version of its domain, for example http://www.domainname.com/, across all of its pages. When a website is made secure, or when trailing slashes are handled inconsistently, issues can occur.

SSL Certificate Installation Problems

When switching a website over to its secure version, usually an SSL certificate is purchased and the website host installs it on the site. When the certificate is installed correctly, there shouldn’t be any issues impacting the website. Sometimes, though, the wrong certificate is provided or something else goes wrong. Let’s examine a few scenarios.

1. The wrong certificate is purchased.

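When the right certificate is in place, all versions of the domain can be redirected to their proper destination: http://, https://, http://www, and https://www will all resolve to the one preferred version. The certificate does not perform the redirects itself, but it must be valid for every hostname involved; otherwise the HTTPS variants produce certificate errors before any redirect can happen. For this to work, purchase a certificate that covers both the bare domain and the www subdomain, such as a wildcard SSL certificate that also lists the bare domain. This prevents half the site from not being redirected properly.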

2. The certificate is installed incorrectly.

It’s rare, but it can happen: the certificate can be installed incorrectly. You could have purchased the correct wildcard certificate, but if it has been installed incorrectly, errors will pop up. The best way to ensure that this does not happen is to have your website host examine the certificate in full, test it, and confirm that it is installed correctly.

3. The certificate expires.

This can happen when a website has been up for a while and changes hands. Someone forgets that the website has a certificate installed, it expires, and errors occur all over the place. This is where a website audit comes in handy for identifying long-expired SSL certificates.
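An expiry check is easy to automate. The sketch below uses only Python's standard `ssl` and `socket` modules; `days_until_expiry` and `fetch_not_after` are hypothetical helper names, and the date string in the demo is invented. Note that `fetch_not_after` makes a live connection and, with the default verification settings, raises `ssl.SSLCertVerificationError` if the certificate has already expired or does not match the hostname, which is itself a useful audit signal.

```python
import socket
import ssl
from datetime import datetime, timezone


def days_until_expiry(not_after: str, now: datetime) -> int:
    """Days between `now` and a certificate's notAfter date (negative if expired)."""
    expires = datetime.fromtimestamp(ssl.cert_time_to_seconds(not_after), tz=timezone.utc)
    return (expires - now).days


def fetch_not_after(hostname: str, port: int = 443, timeout: float = 10.0) -> str:
    """Connect to the host over TLS and return its certificate's notAfter string."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()["notAfter"]


# Offline demo with a made-up expiry date in the format getpeercert() uses.
sample_not_after = "Jan  1 00:00:00 2030 GMT"
today = datetime(2029, 12, 2, tzinfo=timezone.utc)
print(days_until_expiry(sample_not_after, today), "days left")
```

In a real audit you would call something like `days_until_expiry(fetch_not_after("www.example.com"), datetime.now(timezone.utc))` for each hostname variant and flag anything below a threshold you choose, for instance 30 days.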

Trailing Slash Issues in URLs Leading to Duplicate Content

Trailing slash issues can produce duplicate URLs, which in turn cause duplicate content issues across the site. For example, say you have the following setup:

https://www.websitename.com/index.html/

https://www.websitename.com/index.htm/

https://www.websitename.com/index/

https://www.websitename.com/index.htm//

When entered into your browser, all of these variations display the same page, which can cause major issues: Google finds duplicate content and is unable to identify the single source of it. It’s like trying to find a needle in a haystack of noisy signals that hurt your page’s standing in the search engines.

To fix this issue, always ensure that the main URL you want Google to see is declared as the canonical URL, the definitive original source of the content. In addition, all trailing slash variations should be 301 redirected to that canonical URL.
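To see which crawled URLs need such a redirect, you could normalize each one and compare it with the original. This is only a sketch: it assumes the preferred form has no trailing slash (flip the rule if your site prefers one), it does not touch the .htm versus .html variants, and `normalize_trailing_slash` and `redirect_map` are hypothetical names.

```python
import re
from urllib.parse import urlsplit, urlunsplit


def normalize_trailing_slash(url: str) -> str:
    """Collapse repeated slashes in the path and drop any trailing slash."""
    parts = urlsplit(url)
    path = re.sub(r"/{2,}", "/", parts.path)
    if path != "/":
        path = path.rstrip("/")
    return urlunsplit((parts.scheme, parts.netloc, path or "/", parts.query, parts.fragment))


def redirect_map(crawled_urls: list[str]) -> dict[str, str]:
    """Map each URL variant to the URL it should be 301 redirected to."""
    targets = {}
    for url in crawled_urls:
        normalized = normalize_trailing_slash(url)
        if normalized != url:
            targets[url] = normalized
    return targets


CRAWLED = [
    "https://www.websitename.com/index.htm",
    "https://www.websitename.com/index.htm/",
    "https://www.websitename.com/index.htm//",
]

for source, target in redirect_map(CRAWLED).items():
    print(f"301: {source} -> {target}")
```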

301, 302 Redirects, 4xx, 5xx Errors

Normally, a website should operate flawlessly with 200 OK pages that have content. Sometimes, however, errors occur: pages go down, links stop working, and redirects fail to reach their proper destination. Here are a few situations that can arise and should be watched closely during an audit:

1. 4xx, 5xx errors that have not been redirected

In the day-to-day working environment, it is easy to miss 4xx and 5xx errors that have not been properly redirected. The website audit should successfully uncover any erroring pages that are preventing Google from crawling the entire site. While just a few 4xx or 5xx errors are not a major problem, they can become a major issue when you uncover more than just a few.

On a larger site, a large number of 4xx or 5xx errors should be cause for concern. In any case, URLs returning these errors should be 301 redirected to relevant 200 OK pages. 301 redirects are permanent and should be used to transfer SEO value to the new destination page. 302 redirects are meant only for temporary redirection and are seldom the right choice here.
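Once you have a crawl export of URLs and status codes, sorting them into these buckets takes only a few lines. The sketch below uses invented sample data; the bucket names and the `classify_status`, `summarize`, and `error_urls` helpers are assumptions for illustration.

```python
from collections import Counter


def classify_status(status: int) -> str:
    """Sort an HTTP status code into the buckets an audit cares about."""
    if status == 200:
        return "ok"
    if status in (301, 308):
        return "permanent redirect"
    if status in (302, 303, 307):
        return "temporary redirect"
    if 400 <= status < 500:
        return "client error (4xx)"
    if 500 <= status < 600:
        return "server error (5xx)"
    return "other"


def summarize(crawl: dict[str, int]) -> Counter:
    """Count how many crawled URLs fall into each bucket."""
    return Counter(classify_status(status) for status in crawl.values())


def error_urls(crawl: dict[str, int]) -> list[str]:
    """List the URLs that return 4xx or 5xx and still need attention."""
    return [url for url, status in crawl.items() if 400 <= status < 600]


# Hypothetical crawl export: URL -> status code.
SAMPLE_CRAWL = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/sale": 302,
    "https://www.example.com/missing": 404,
    "https://www.example.com/report": 500,
}

print(summarize(SAMPLE_CRAWL))
print(error_urls(SAMPLE_CRAWL))
```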

2. Any 200 OK pages that do not have content but actually are 404’ed (soft 404s)

Not every website is set up the way we want, or in a way that allows us to easily identify all 404s. Thankfully, a website audit makes it quite easy to identify soft 404s. A soft 404 is any page that says “page not found” but presents itself as a 200 OK page. When you crawl the site in Screaming Frog, set a filter under Configuration > Custom > Search and enter “page not found” for Filter 1 (Contains). The spider will then flag any pages that present themselves as 200 OK but are in reality soft 404s.
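The same idea can be expressed outside of Screaming Frog. The following sketch assumes you already have each page's status code and body text; the phrase list and the `is_soft_404` helper are illustrative assumptions, and a simple substring match like this can produce false positives on pages that merely mention the phrase.

```python
# Phrases that suggest an error page (extend to match your own site's wording).
SOFT_404_PHRASES = ("page not found",)


def is_soft_404(status: int, body: str, phrases: tuple[str, ...] = SOFT_404_PHRASES) -> bool:
    """True when a page returns 200 OK but its text looks like an error page."""
    if status != 200:
        return False
    text = body.lower()
    return any(phrase in text for phrase in phrases)


# Hypothetical crawl results: (URL, status code, body text).
PAGES = [
    ("https://www.example.com/", 200, "<h1>Welcome</h1>"),
    ("https://www.example.com/gone", 200, "<h1>Page Not Found</h1>"),
    ("https://www.example.com/missing", 404, "<h1>Page Not Found</h1>"),
]

for url, status, body in PAGES:
    if is_soft_404(status, body):
        print("SOFT 404:", url)
```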

A website is never done. While the above is not a comprehensive list of website audit items that you can take care of in one fell swoop, hopefully this will help you get started on the road towards performing your own comprehensive website audits. Happy auditing!
